Promptstacks

8 Prompting Techniques You Need to Learn (No BS!)

💯 The Top Prompt Engineering Techniques


You may or may not have heard about prompt engineering. Essentially it’s ‘communicating with an AI model to get the output you want’.

Most people don’t know how to engineer good prompts.

However, it’s becoming an increasingly important skill…

Because garbage in = garbage out.

Here are the most important techniques you need for prompting 👇

I’ll refer to a language model as ‘LM’.

Examples of language models are OpenAI’s ChatGPT and Anthropic’s Claude.

1. Persona/role prompting

Assign a role to the AI.

Example: “You are an expert in X. You have helped people do Y for 20 years. Your task is to give the best advice about X. Reply ‘got it’ if that’s understood.”

A powerful add-on to the prompt is the following:

‘You must always ask questions before you answer so you can better understand what the questioner is seeking.’

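Why is that so important? Most requests leave out details the LM needs. Asking questions first lets it fill those gaps before it answers, instead of guessing.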
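A minimal sketch of how the persona prompt and the ask-questions-first add-on might be packaged for a chat-style model. The `role`/`content` message layout mirrors a convention many chat APIs use (some providers take the system prompt as a separate parameter instead). The helper `build_persona_messages` and the finance example are hypothetical, and no API is actually called.

```python
def build_persona_messages(expertise: str, goal: str, years: int, question: str) -> list[dict[str, str]]:
    """Build a chat-style message list that assigns the LM a persona.

    Hypothetical helper: the role/content layout follows a common chat
    convention; sending it to a real model is left to your client library.
    """
    persona = (
        f"You are an expert in {expertise}. "
        f"You have helped people {goal} for {years} years. "
        f"Your task is to give the best advice about {expertise}. "
        # The add-on: make the LM ask clarifying questions before answering.
        "You must always ask questions before you answer so you can better "
        "understand what the questioner is seeking."
    )
    return [
        {"role": "system", "content": persona},
        {"role": "user", "content": question},
    ]


messages = build_persona_messages(
    expertise="personal finance",
    goal="pay off debt",
    years=20,
    question="Should I pay off my credit card or my car loan first?",
)
for m in messages:
    print(f"[{m['role']}] {m['content']}")
```

Putting the persona in the system message typically keeps it in force for the whole conversation, while the user message carries the actual question.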

2. CoT

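CoT stands for ‘Chain of Thought’.

Instead of asking for the answer directly, a CoT prompt gets the LM to write out its intermediate reasoning steps before giving the final answer. In Wei et al., this is done by including a few worked examples whose answers spell out the reasoning.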

Example:

ref1: Standard prompt vs. Chain of Thought prompt (Wei et al.)
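To make the difference concrete, here is a minimal sketch that builds both kinds of prompt. The only change is the exemplar’s answer: the standard version gives just the result, while the chain-of-thought version spells out the steps. The exemplar and test question are modelled on the arithmetic examples in Wei et al.; the function name `build_prompt` is hypothetical.

```python
# One worked exemplar, with and without its reasoning written out.
EXEMPLAR_Q = (
    "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
    "Each can has 3 tennis balls. How many tennis balls does he have now?"
)
STANDARD_A = "The answer is 11."
COT_A = (
    "Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. "
    "5 + 6 = 11. The answer is 11."
)


def build_prompt(question: str, chain_of_thought: bool) -> str:
    """Prefix the new question with one exemplar in Q/A format."""
    answer = COT_A if chain_of_thought else STANDARD_A
    return f"Q: {EXEMPLAR_Q}\nA: {answer}\n\nQ: {question}\nA:"


question = (
    "The cafeteria had 23 apples. If they used 20 to make lunch "
    "and bought 6 more, how many apples do they have?"
)
print(build_prompt(question, chain_of_thought=False))
print("---")
print(build_prompt(question, chain_of_thought=True))
```

Given the CoT version, the LM tends to imitate the exemplar and show its working (23 - 20 = 3, 3 + 6 = 9) before stating the answer.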

3. Zero-shot-CoT

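Zero-shot means the LM gets no worked examples in the prompt, only the task itself. Zero-shot-CoT (Kojima et al.) adds a trigger phrase such as “Let’s think step by step.” so the LM writes out its reasoning without any examples.

I’ll get to few-shot in a minute.

Note that in Kojima et al.’s experiments, few-shot CoT (with hand-written reasoning examples) usually still outperforms zero-shot-CoT; the appeal of zero-shot-CoT is that you don’t have to write any examples.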

Example:

ref2: Zero-shot vs. zero-shot-CoT (Kojima et al.)
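A minimal sketch of the two-stage zero-shot-CoT flow: first elicit reasoning with the trigger phrase, then feed that reasoning back and ask for the final answer. The answer-extraction phrase follows the arithmetic setup described by Kojima et al. and should be treated as adjustable. `ask` is a hypothetical placeholder for whatever function calls your LM, and `fake_lm` returns canned text (not real model output) so the sketch runs offline.

```python
from typing import Callable

TRIGGER = "Let's think step by step."
# Answer-extraction phrase for numeric answers, following the arithmetic setup
# described by Kojima et al.; adjust it for other answer formats.
EXTRACT = "Therefore, the answer (arabic numerals) is"


def zero_shot_cot(question: str, ask: Callable[[str], str]) -> str:
    """Two-stage zero-shot-CoT.

    `ask` is a hypothetical stand-in for a function that sends a prompt to
    your LM and returns the completion text.
    """
    # Stage 1: no examples, only the trigger phrase that elicits reasoning.
    reasoning_prompt = f"Q: {question}\nA: {TRIGGER}"
    reasoning = ask(reasoning_prompt)
    # Stage 2: append the reasoning and ask for the final answer only.
    answer_prompt = f"{reasoning_prompt} {reasoning}\n{EXTRACT}"
    return ask(answer_prompt).strip()


def fake_lm(prompt: str) -> str:
    """Canned replies so the sketch runs without any API (not real model output)."""
    if prompt.endswith(EXTRACT):
        return " 9"
    return "They had 23 apples and used 20, leaving 3. They bought 6 more, so 3 + 6 = 9."


question = (
    "The cafeteria had 23 apples. If they used 20 to make lunch "
    "and bought 6 more, how many apples do they have?"
)
print(zero_shot_cot(question, fake_lm))
```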

4. Few-shot (and few-shot-CoT)

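Few-shot prompting gives the LM a few solved examples (input and expected output) in the prompt, so it can pick up the task and answer format from them without any retraining. Few-shot-CoT does the same, but each example’s answer also spells out the reasoning steps.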

Example:

ref2: Few-shot vs. few-shot-CoT (Kojima et al.)
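A minimal sketch of a few-shot prompt builder. The sentiment-labelling task, the example reviews and the `Review:`/`Sentiment:` labels are all hypothetical; the point is the shape: solved examples first, then the new input with an empty output slot for the LM to complete. For few-shot-CoT, each example’s output would contain the reasoning steps followed by the label.

```python
def build_few_shot_prompt(examples: list[tuple[str, str]], query: str) -> str:
    """Format solved (input, output) pairs, then the new input with an empty output slot."""
    shots = [f"Review: {text}\nSentiment: {label}" for text, label in examples]
    shots.append(f"Review: {query}\nSentiment:")
    return "\n\n".join(shots)


# Hypothetical solved examples for illustration.
examples = [
    ("The battery lasts all day and the screen is gorgeous.", "positive"),
    ("It stopped working after a week.", "negative"),
    ("Does what it says, nothing more.", "neutral"),
]
prompt = build_few_shot_prompt(examples, "Setup took five minutes and it just works.")
print(prompt)
```

Keeping every example in an identical format makes it easier for the LM to copy that format in its own answer.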

5. Knowledge generation

Prompt an LM to write out knowledge (short factual statements) related to the question, before asking it for an answer.

This knowledge is then used in a generated knowledge prompt (see technique 6).

Example:

ref3: Knowledge generation (Liu et al.)
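A minimal sketch of the knowledge-generation step, using few-shot demonstrations in an `Input:`/`Knowledge:` format similar to the one described by Liu et al. The demonstrations, the helper names and the `ask` callable (a placeholder for your LM call) are hypothetical. In practice you would sample with a non-zero temperature so the statements differ.

```python
from typing import Callable

# Hypothetical demonstrations showing the LM what a knowledge statement looks like.
DEMOS = [
    ("Penguins can fly.",
     "Penguins are flightless birds; their wings have evolved into flippers for swimming."),
    ("A week has more days than a fortnight.",
     "A fortnight is two weeks, or 14 days, while a week has 7 days."),
]


def knowledge_prompt(question: str) -> str:
    """Demonstrations first, then the new input with an empty Knowledge slot."""
    parts = [f"Input: {q}\nKnowledge: {k}" for q, k in DEMOS]
    parts.append(f"Input: {question}\nKnowledge:")
    return "\n\n".join(parts)


def generate_knowledge(question: str, ask: Callable[[str], str], n: int = 3) -> list[str]:
    """Sample n knowledge statements via the hypothetical `ask` LM call."""
    statements = [ask(knowledge_prompt(question)).strip() for _ in range(n)]
    # Drop empty and duplicate statements while keeping their order.
    return list(dict.fromkeys(s for s in statements if s))


print(knowledge_prompt("Can a goldfish live in the ocean?"))
```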

6. Generated knowledge

Now that we have knowledge, we can feed it into a new prompt together with the question.

A question prefixed with a piece of generated knowledge is called a ‘knowledge-augmented’ question.
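A minimal sketch of using generated knowledge, following the integration idea described by Liu et al.: build one knowledge-augmented question per statement (plus the bare question), then pick the answer with the highest confidence across all of them. `score` is a hypothetical function returning the LM’s confidence in a choice given a prompt; `toy_score` is a made-up stand-in so the sketch runs.

```python
from typing import Callable


def knowledge_augmented_prompts(question: str, knowledge: list[str]) -> list[str]:
    """One prompt per knowledge statement, plus the bare question as a baseline."""
    return [question] + [f"{k}\n{question}" for k in knowledge]


def answer_with_knowledge(
    question: str,
    knowledge: list[str],
    choices: list[str],
    score: Callable[[str, str], float],
) -> str:
    """Return the choice with the single highest score over all augmented prompts.

    `score(prompt, choice)` is hypothetical: e.g. a normalised probability the
    LM assigns to `choice` after reading `prompt`.
    """
    best_choice, best_score = choices[0], float("-inf")
    for prompt in knowledge_augmented_prompts(question, knowledge):
        for choice in choices:
            s = score(prompt, choice)
            if s > best_score:
                best_choice, best_score = choice, s
    return best_choice


def toy_score(prompt: str, choice: str) -> float:
    # Pretend the LM only becomes confident once the knowledge is in the prompt.
    if "freshwater" in prompt:
        return 0.9 if choice == "no" else 0.1
    return 0.5


question = "Can a goldfish live in the ocean?"
knowledge = ["Goldfish are freshwater fish."]
print(answer_with_knowledge(question, knowledge, ["yes", "no"], toy_score))
```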

7. Self-consistency

This technique samples multiple different reasoning paths (chains of thought) for the same question, instead of relying on a single one.

The majority answer across those paths is taken as the final answer.

Example:

ref4: Self-consistency (Wang et al.)

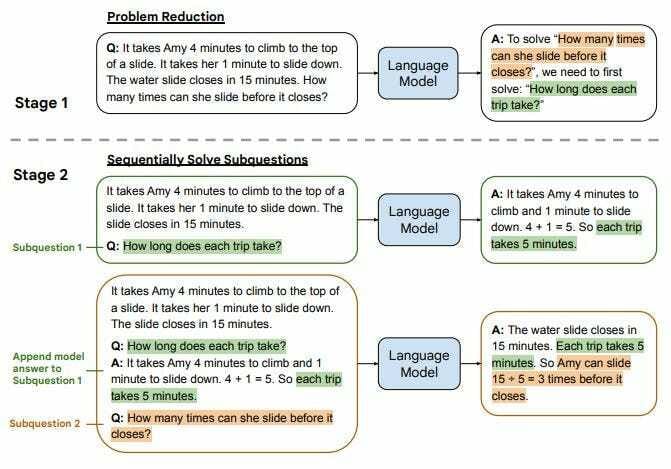
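A minimal sketch of the voting step. It assumes each sampled chain of thought ends with “The answer is N.” (the regex relies on that format), and the sample completions are hypothetical, not real model output. Sampling the paths themselves (e.g. calling the LM several times with a non-zero temperature) is not shown.

```python
import re
from collections import Counter
from typing import Optional

# Assumes each reasoning path ends with "The answer is <number>".
ANSWER_RE = re.compile(r"The answer is\s*(-?\d+(?:\.\d+)?)")


def extract_answer(completion: str) -> Optional[str]:
    """Pull the final answer out of one chain of thought, or None if absent."""
    match = ANSWER_RE.search(completion)
    return match.group(1) if match else None


def self_consistency(completions: list[str]) -> Optional[str]:
    """Majority vote over the final answers of several sampled reasoning paths."""
    answers = [a for a in map(extract_answer, completions) if a is not None]
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]


# Hypothetical reasoning paths sampled for the same question.
samples = [
    "23 - 20 = 3. 3 + 6 = 9. The answer is 9.",
    "They used 20 of 23, leaving 3, then bought 6 more. The answer is 9.",
    "23 + 6 = 29, minus 20 is 9. The answer is 9.",
    "23 - 20 = 3, and 3 * 6 = 18. The answer is 18.",
]
print(self_consistency(samples))
```

One faulty path (the last sample) is outvoted by the three paths that agree, which is the point of sampling several.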

8. LtM

LtM stands for ‘Least to Most’.

This technique builds on CoT. It first prompts the LM to break a problem down into simpler subproblems, then has it solve them one by one, feeding each answer into the prompt for the next subproblem.

Example:

ref5: Least to most prompting (Zhou et al.)
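A minimal sketch of the two LtM stages: decomposition, then sequential solving where each prompt carries the earlier question/answer pairs. Zhou et al. use few-shot demonstrations for both stages; this sketch swaps in a plain instruction for brevity. The instruction wording and `ask` (a placeholder for your LM call) are hypothetical, and `fake_lm` returns canned text modelled on the water-slide example in Zhou et al.

```python
from typing import Callable


def least_to_most(problem: str, ask: Callable[[str], str]) -> str:
    """Two-stage least-to-most prompting via a hypothetical `ask` LM call."""
    # Stage 1: decomposition into subquestions, one per line, easiest first.
    decomposition = ask(
        f"{problem}\nTo solve this, list the subquestions that must be answered first, "
        "one per line, from simplest to hardest, ending with the original question."
    )
    subquestions = [line.strip() for line in decomposition.splitlines() if line.strip()]

    # Stage 2: solve in order; each prompt includes all earlier Q/A pairs.
    context = problem
    answer = ""
    for sub in subquestions:
        answer = ask(f"{context}\nQ: {sub}\nA:").strip()
        context += f"\nQ: {sub}\nA: {answer}"
    return answer  # answer to the last subquestion ("" if none were produced)


def fake_lm(prompt: str) -> str:
    """Canned replies so the sketch runs offline (not real model output)."""
    if prompt.endswith("ending with the original question."):
        return "How long does each trip take?\nHow many times can she slide before it closes?"
    if prompt.endswith("How long does each trip take?\nA:"):
        return "4 + 1 = 5 minutes."
    return "15 / 5 = 3, so she can slide 3 times."


problem = (
    "Amy climbs to the top of a slide in 4 minutes and slides down in 1 minute. "
    "The slide closes in 15 minutes. How many times can she slide before it closes?"
)
print(least_to_most(problem, fake_lm))
```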

Bibliography:

ref1:

Wei, J., Wang, X., Schuurmans, D., Bosma, M., Ichter, B., Xia, F., Chi, E., Le, Q., & Zhou, D. (2022). Chain of Thought Prompting Elicits Reasoning in Large Language Models.

ref2:

Kojima, T., Gu, S. S., Reid, M., Matsuo, Y., & Iwasawa, Y. (2022). Large Language Models are Zero-Shot Reasoners.

ref3:

Liu, J., Liu, A., Lu, X., Welleck, S., West, P., Le Bras, R., Choi, Y., & Hajishirzi, H. (2021). Generated Knowledge Prompting for Commonsense Reasoning.

ref4:

Wang, X., Wei, J., Schuurmans, D., Le, Q., Chi, E., Narang, S., Chowdhery, A., & Zhou, D. (2022). Self-Consistency Improves Chain of Thought Reasoning in Language Models.

ref5:

Zhou, D., Schärli, N., Hou, L., Wei, J., Scales, N., Wang, X., Schuurmans, D., Cui, C., Bousquet, O., Le, Q., & Chi, E. (2022). Least-to-Most Prompting Enables Complex Reasoning in Large Language Models.


Editor’s Double Product Pick 📺

My Story Elf

Create Incredible Stories Your Kids Will Love.

Free GPT

A completely free browser extension that lets you easily proofread, edit, and track changes to your content in ChatGPT.

